Integrating immersive audio, including Audio Description (AD), into 360° video content is an important step towards enabling blind and partially sighted people to enjoy the full experience. To achieve this goal, new ways of producing and delivering the audio content need to be developed and tested. It is also key to a good end-user experience to know what these users expect from 360° AD, so focus group tests were conducted to include their wishes in the development. A previous ImAc post introduces some of the questions that arise for an Audio Description service in a 360° media environment (https://www.imacproject.eu/2017/12/04/immersive-accessibility-introducing-a-narrator-2/).

This article describes technical aspects for producing, delivering and consuming a “3D audio scene” including Audio Description (AD), based on these first deliberations and on the user requirements known so far.

Creating an immersive audio experience for 360° video content

One result of the ImAc focus group tests was that, for the target group, an appropriate main audio mix will contribute more to the user experience than the AD service itself. Thus, not only the AD service matters: the general audio quality and the playback system will also have a major impact on the immersive (audio) experience.

With that finding in mind we will look at the next generation audio (NGA) technologies and show where AD production can take advantage of them.

From a technical point of view, Audio Description can be seen as an additional audio asset and, as such, can be handled similarly to other audio sources. The following sections outline the advantages and disadvantages of different technical approaches.

Object-based audio – A representation of the real world

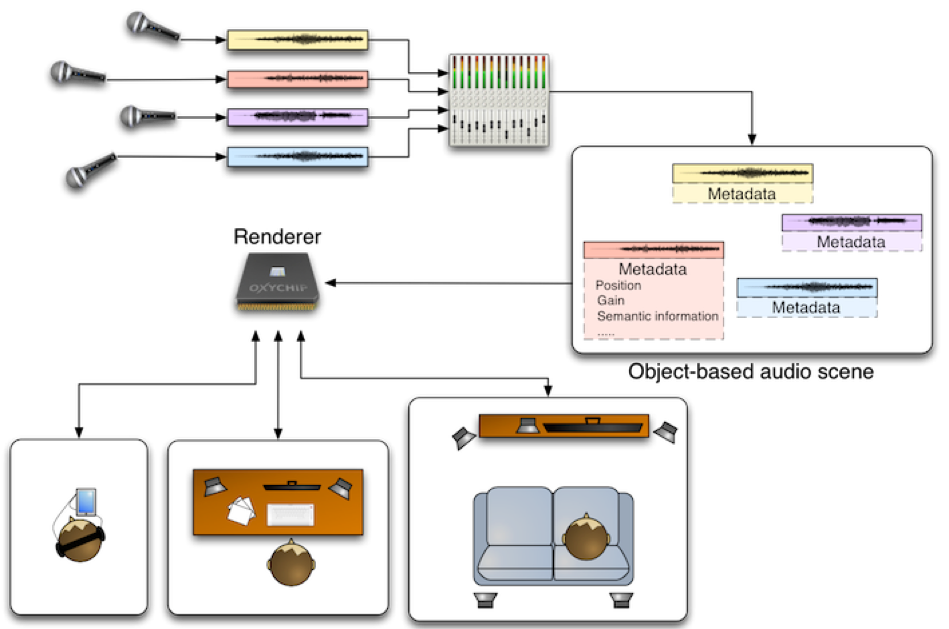

Object-based audio (OBA) is a revolutionary approach for creating and deploying interactive, personalized, scalable and immersive audio content.

An object-based production approach can overcome obstacles inherent in a standard channel-based audio production workflow. The term ‘object-based media’ is commonly used to describe the representation of media content by a set of individual assets, together with metadata describing their relationships and associations. At the point of consumption, these objects are assembled to create an overall user experience. The precise combination of objects can be flexible and responsive to user preferences, as well as to environmental and platform-specific factors.

The goal of OBA is to capture the creative intent of the producer and to carry as much information as possible from the production side to the end-user, thus ensuring the best reproduction possible on the consumer side. To achieve this, the produced audio scene is composed of several objects. The metadata associated with each object includes, but is not limited to, the target position of the audio signal, its target loudness and a description of its actual content.
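To make the idea of "audio plus metadata" more concrete, here is a minimal sketch of how an audio scene could be modelled. The class and field names are hypothetical and chosen for illustration only; they do not follow any particular standard or the ImAc implementation.

```python
from dataclasses import dataclass, field


@dataclass
class AudioObject:
    """One audio asset plus the metadata that describes it (hypothetical schema)."""
    name: str
    azimuth_deg: float    # target position: 0 = front, positive = listener's left
    elevation_deg: float  # target position: 0 = ear level, positive = up
    loudness_lufs: float  # target loudness
    content: str          # description of the content, e.g. "dialogue"


@dataclass
class AudioScene:
    """A scene is simply a collection of objects assembled at playback time."""
    objects: list = field(default_factory=list)

    def by_content(self, content: str) -> list:
        # Select objects by their content description, e.g. to let the
        # user turn one kind of content up or down.
        return [o for o in self.objects if o.content == content]


# Illustrative values only.
scene = AudioScene([
    AudioObject("actor", 0.0, 0.0, -23.0, "dialogue"),
    AudioObject("birds", 110.0, 30.0, -35.0, "ambience"),
])
```

Because each object carries its own description, a player could, for example, pick out all "dialogue" objects with `scene.by_content("dialogue")` and treat them differently from the ambience.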

Further information about object-based audio production can be read and heard here: https://lab.irt.de/demos/object-based-audio/

In summary, an OBA production can deliver the best possible experience for the end user: on a suitable playback device, the resulting sound can come close to reality and be highly immersive.

Formats and delivery

Today, an end-to-end OBA workflow cannot be provided at large scale. For one thing, OBA is very costly to produce. More importantly, it cannot yet be brought to end-user devices, because the standards are only now evolving and still lack wide support. Existing OBA implementations are mostly demos, prototypes or proprietary solutions.

ImAc has considered delivering OBA all the way to the end-user device (the ImAc player). This would allow for high flexibility regarding both the audio scene and the playback system, because the audio would be rendered on the end-user device. However, client-side rendering requires relatively high processing power. This is one of the reasons why the ImAc project is investigating the use of different audio streams, each tailored to a specific playback system, for example binaural audio, Ambisonics or 5.1.

On the production side, however, OBA should preferably be used: from an object-based audio scene, any audio output format can be generated on demand, always in the best possible quality for the target playback system. The number and kind of formats generated depend on which playout channels are fed, and can easily be extended if future delivery formats demand it.
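As a rough illustration of what "generating an output format from an object" means, the sketch below derives left and right gains for plain stereo from an object's azimuth, using a simple constant-power (sine/cosine) pan law. This is a deliberately simplified stand-in, not the renderer used in ImAc; the function name and the ±30° loudspeaker half-width are assumptions.

```python
import math


def stereo_gains(azimuth_deg: float, half_width_deg: float = 30.0):
    """Return (left_gain, right_gain) for one object using a constant-power pan.

    Azimuth convention (assumed): 0 = front, positive = listener's left.
    Positions outside the loudspeaker base are clamped to the nearest speaker.
    """
    az = max(-half_width_deg, min(half_width_deg, azimuth_deg))
    # Map +half_width (fully left) .. -half_width (fully right) to 0 .. pi/2.
    theta = (half_width_deg - az) / (2.0 * half_width_deg) * (math.pi / 2.0)
    return math.cos(theta), math.sin(theta)


left, right = stereo_gains(0.0)  # centred object: equal gain on both channels
```

The same object could be fed to a different function to produce 5.1, Ambisonics or binaural output, which is why the scene itself does not need to change when a new delivery format is added.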

Stereo and 5.1 productions can be represented in OBA as well, so that the same workflow can be used for any production. For instance, a stereo production would be imported into an audio scene by adding two audio objects, one for each channel. These objects are placed where the loudspeakers would stand in a typical stereo setup. The same approach can be used for 5.1 or any other channel-based production. That way, all typical state-of-the-art production formats can be supported in ImAc. This is relevant, since OBA productions are still rare.
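The import of a channel-based production can be sketched as follows. The loudspeaker angles are the nominal positions commonly used for stereo and 5.1 (assumed here from ITU-R BS.775 practice); the names `ChannelObject`, `LAYOUTS` and `import_channel_bed` are hypothetical.

```python
from typing import NamedTuple


class ChannelObject(NamedTuple):
    channel: str
    azimuth_deg: float  # 0 = front, positive = listener's left


# Nominal loudspeaker azimuths (assumption). The LFE channel has no
# meaningful direction; it is placed at the front purely as a placeholder.
LAYOUTS = {
    "stereo": {"L": 30.0, "R": -30.0},
    "5.1": {"L": 30.0, "R": -30.0, "C": 0.0, "LFE": 0.0,
            "Ls": 110.0, "Rs": -110.0},
}


def import_channel_bed(layout: str) -> list:
    """Turn each channel of a channel-based mix into one positioned object."""
    return [ChannelObject(ch, az) for ch, az in LAYOUTS[layout].items()]


stereo_objects = import_channel_bed("stereo")
surround_objects = import_channel_bed("5.1")
```

After this step, a legacy stereo or 5.1 mix looks like any other set of objects in the scene and can pass through the same rendering chain.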

The impact of the playback system

The immersive experience of the end user is influenced not only by the audio format itself, but also by the playback system. How the audio scene should be presented to the user depends on the playback environment. Basically, there are two kinds of playback devices, called “fixed” and “non-fixed” in the following.

- A fixed playback device does not rotate or move. To change the user’s viewing direction, another part of the video needs to be moved into the field of view (FOV). One could say that the video rotates around the user. The user doesn’t rotate but always looks in the same direction. Navigation is often done by mouse, arrow keys or swiping on a touch display.

- With a non-fixed playback device, users need to look around (move their head) in order to change the FOV. One could say that the video does not rotate around the user; instead, the user turns to watch different areas of the 360° scene. This is the case for head-mounted displays.

It is assumed that the audio scene presented to the user should align with the video for the best immersive experience. As a result, the kind of playback device determines how the audio scene must rotate around the user. In theory, both loudspeaker setups and headphones can be used for playback, but the rendering process has to be tailored to the actual system, simply because headphones move with the head and loudspeakers do not. Accordingly, the rendering has to be adapted if a predictable and accurate representation of the audio scene is the goal.

When headphones are used with a “non-fixed” playback device (as described above), the head movement and position are relevant for creating the audio scene. Simulating a room via headphones is relatively common, but a static (non-rotating) audio scene cannot be realized on a “rotating playback device” (headphones) with standard consumer headphones alone. First, the viewing direction of the listener must be tracked; second, the audio signal must be rendered for exactly this direction.
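The second step, rendering for the tracked direction, starts with a simple piece of geometry: the direction of each source relative to the listener's head. The sketch below covers only the horizontal angle (yaw) and assumes angles in degrees with positive values to the listener's left; the function names are hypothetical.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def head_relative_azimuth(source_az_deg: float, head_yaw_deg: float) -> float:
    """Direction of a source as heard from the current head orientation.

    source_az_deg: source direction in the (static) scene.
    head_yaw_deg:  tracked viewing direction of the listener.
    Subtracting the yaw counter-rotates the scene, so that it appears to
    stay fixed in the room while the headphones turn with the head.
    """
    return wrap_deg(source_az_deg - head_yaw_deg)


# A source straight ahead, listener turns 90 degrees to the left:
relative = head_relative_azimuth(0.0, 90.0)  # -90.0, i.e. now on the right
```

For a fixed device, the same calculation could be driven by the viewing direction set via mouse or touch instead of a head tracker. The resulting angle would then be passed to a binaural renderer, which is outside the scope of this sketch.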

This example shows that audio delivery poses some challenges. In the end, audio must be prepared and delivered in such a way that all user devices can play it with an acceptable level of (spatial) immersion.

Summary

As stated above, an AD track can be understood as an additional audio object, and that is exactly how we treat it in ImAc. Adding AD as a separate object to the scene makes it possible to vary parameters such as the volume or position of the AD speaker. Would you like to hear the AD speaker as if they were sitting next to you? No problem. A few promising options were selected for testing, for example one where the speaker’s voice comes from the direction of the action, as well as the traditional “omni-present” AD.
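A minimal sketch of how such AD variants could be expressed as parameters of a single object is shown below. The mode names, the 90° position for "next to you" and the data structure are assumptions made for illustration; they are not the presets actually used in the ImAc tests.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ADPlacement:
    azimuth_deg: Optional[float]  # None = not spatialized ("omni-present")
    gain_db: float                # volume offset relative to the main mix


def place_ad(mode: str, action_azimuth_deg: float = 0.0,
             gain_db: float = 0.0) -> ADPlacement:
    """Choose where the AD voice is heard (hypothetical modes)."""
    if mode == "omnipresent":
        return ADPlacement(None, gain_db)
    if mode == "beside_listener":
        # Assumed: directly to the listener's left (positive = left).
        return ADPlacement(90.0, gain_db)
    if mode == "follow_action":
        return ADPlacement(action_azimuth_deg, gain_db)
    raise ValueError(f"unknown AD mode: {mode}")


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


# The action happens 45 degrees to the right; AD is lowered by 6 dB.
ad = place_ad("follow_action", action_azimuth_deg=-45.0, gain_db=-6.0)
```

Since only the metadata of the AD object changes, the main mix stays untouched whichever variant is selected.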

The variations selected by the producer will be pre-rendered and delivered to the player. In the ImAc player, users can choose their preferred AD representation. At the current stage, an ideal listening environment can only be implemented as a lab demonstration, but users can already benefit from the advantages of an OBA production using just their headphones.